Non-discrimination by design

2021

Non-discrimination by design is a guideline to prevent bias in algorithms

Artificial Intelligence replaces and optimizes human choices and decisions. Relying on data, mathematics, and statistics rather than emotions, biases, or moods should make that process fairer. Reality has proven otherwise. In practice, digital decision-making often results in the systematic disadvantage, exclusion, or punishment of minorities, whether or not the organizations involved intend it. Unfortunately, this bias often goes unnoticed by both the victims and the authorities.

This guideline was commissioned by the Dutch Ministry of the Interior and Kingdom Relations.

The project is a collaboration between a team of experts from Tilburg University, Eindhoven University of Technology, Vrije Universiteit Brussel, and The Netherlands Institute for Human Rights. Designed by Studio Julia Janssen.

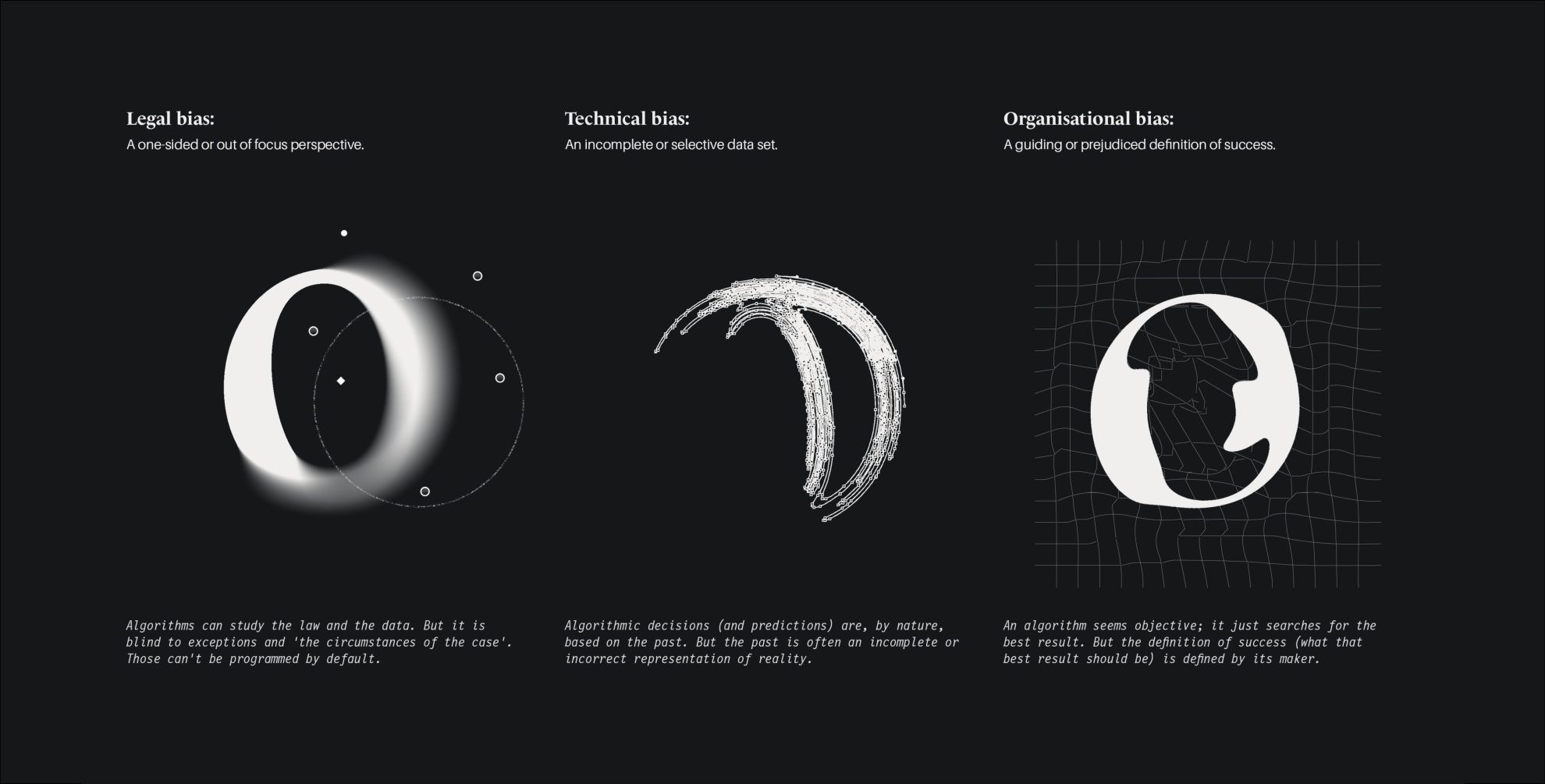

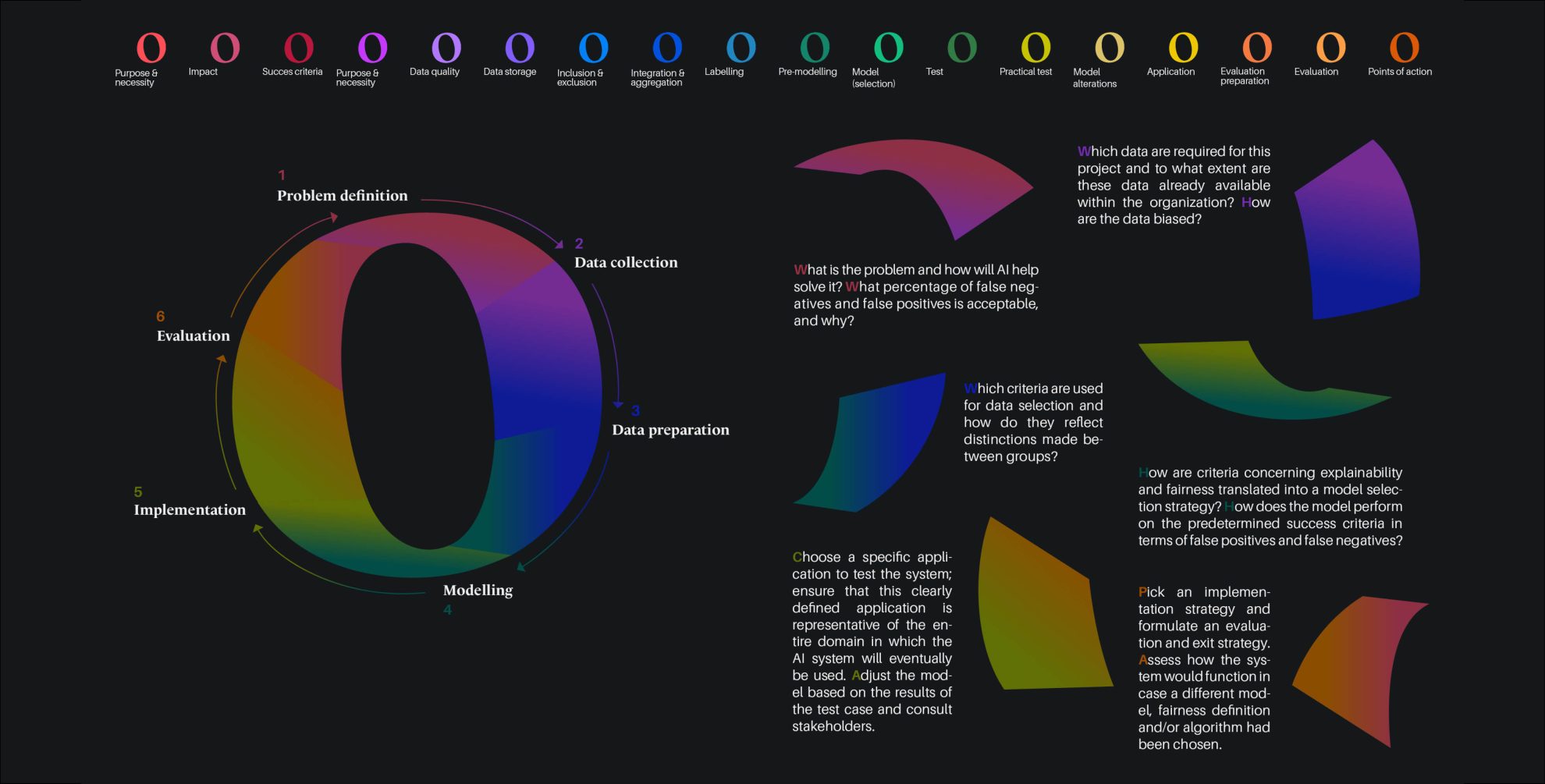

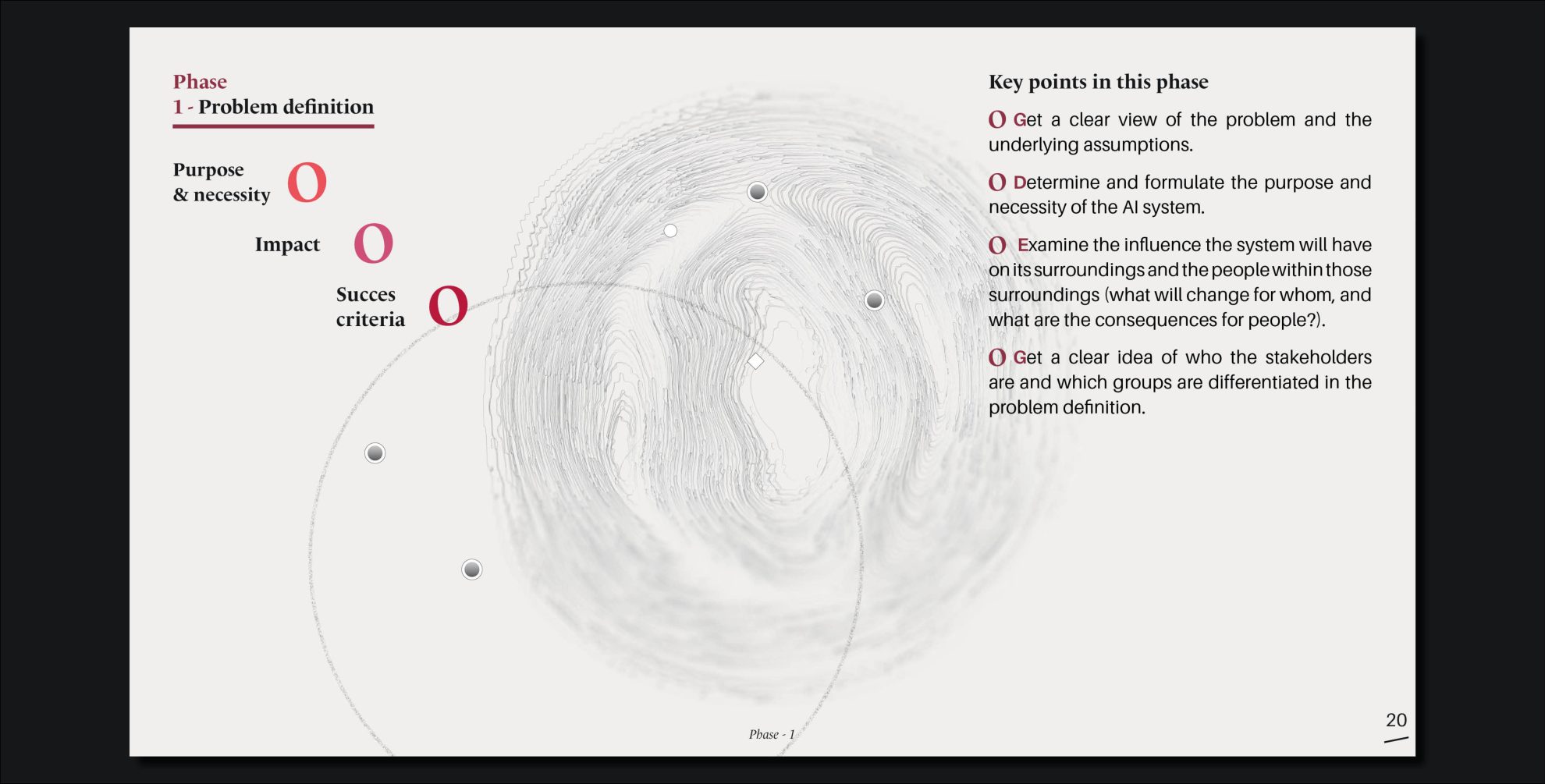

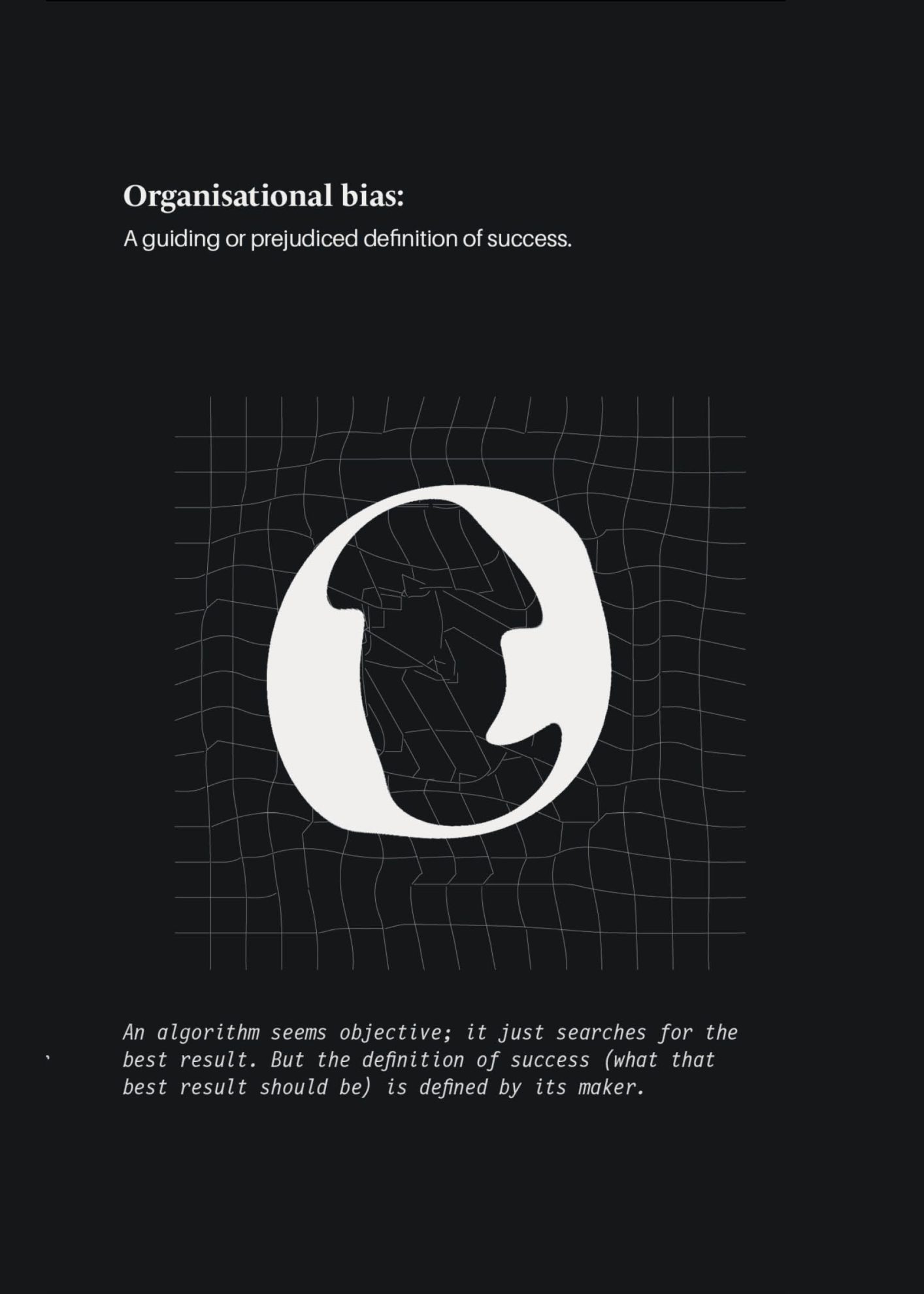

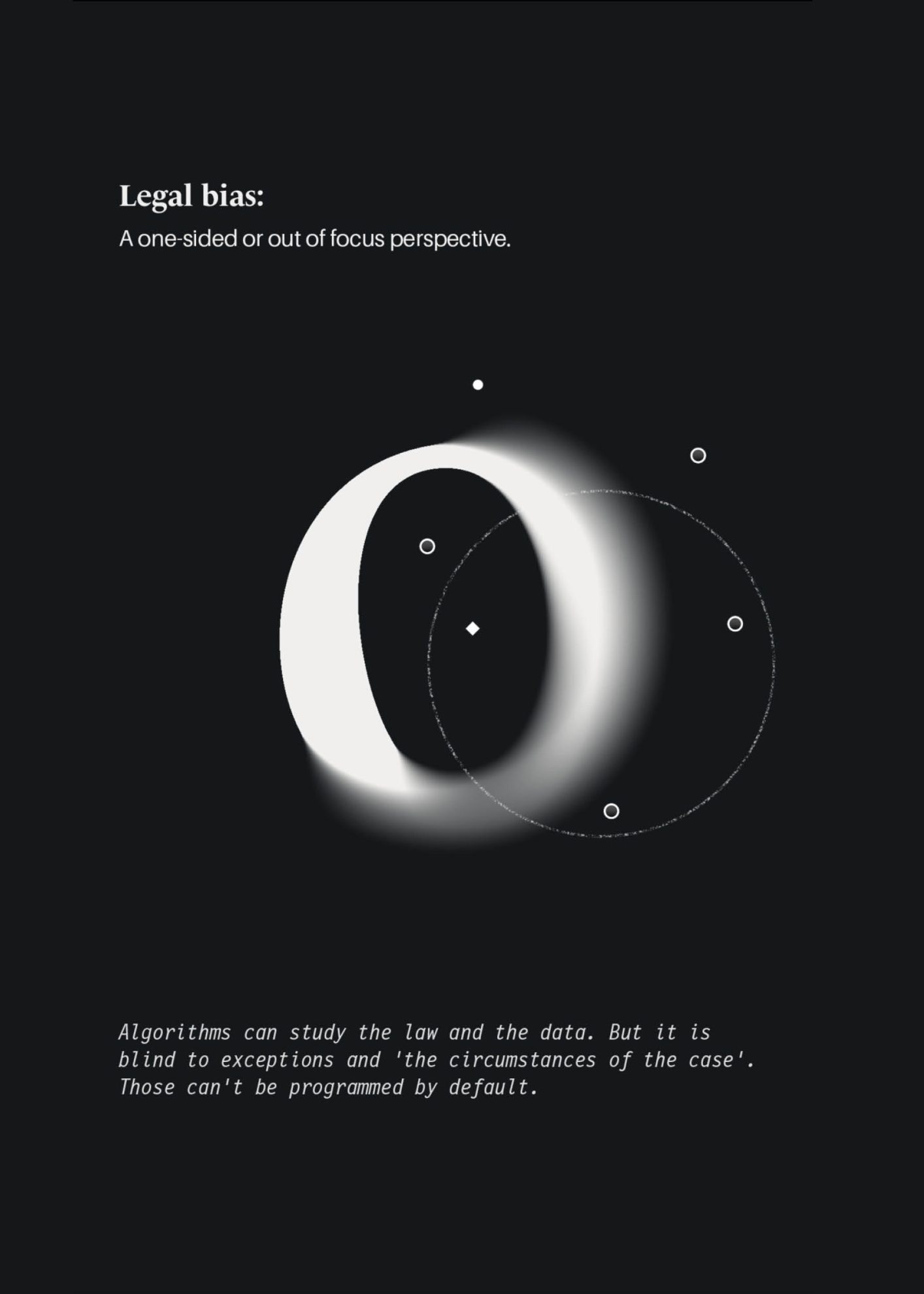

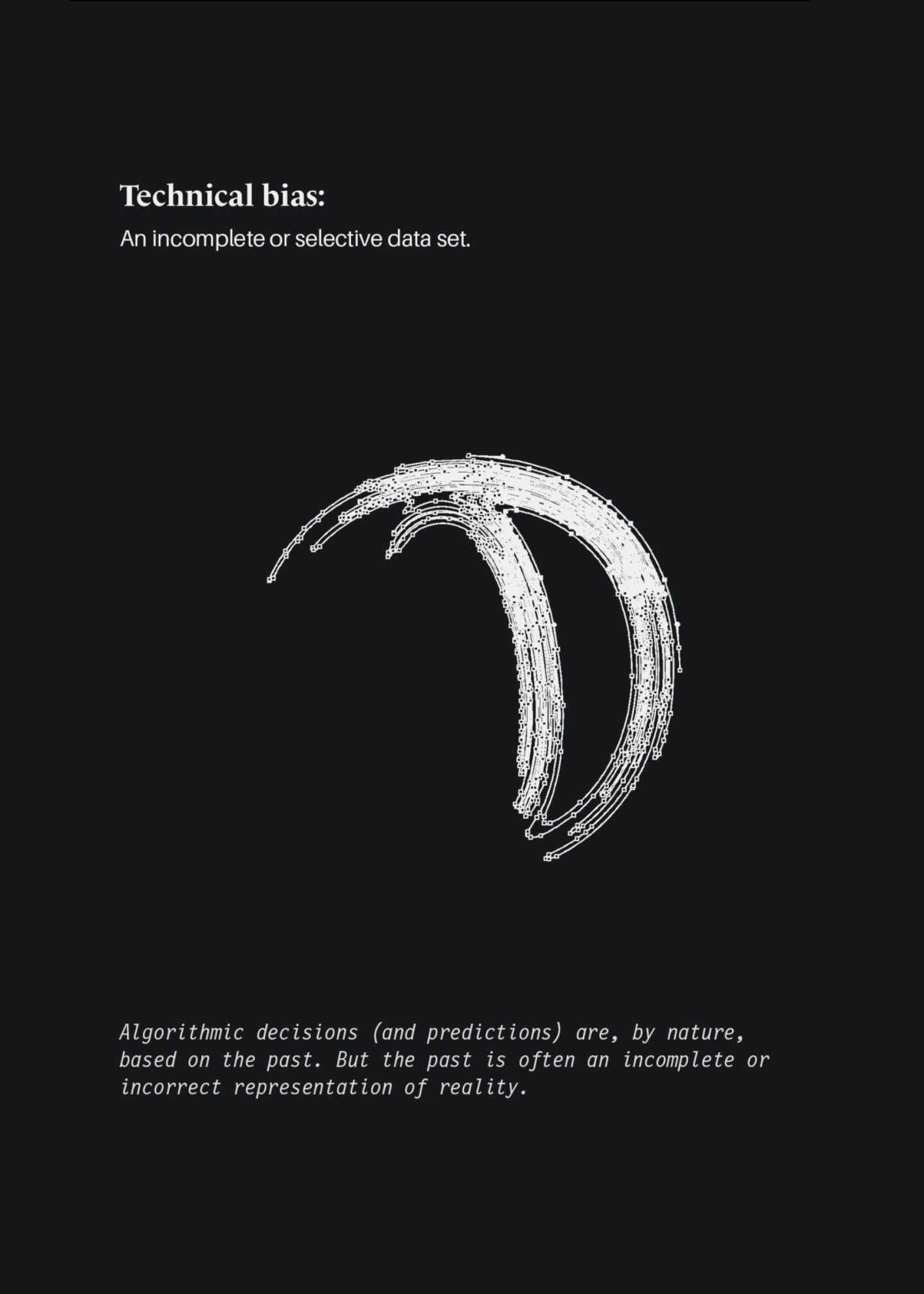

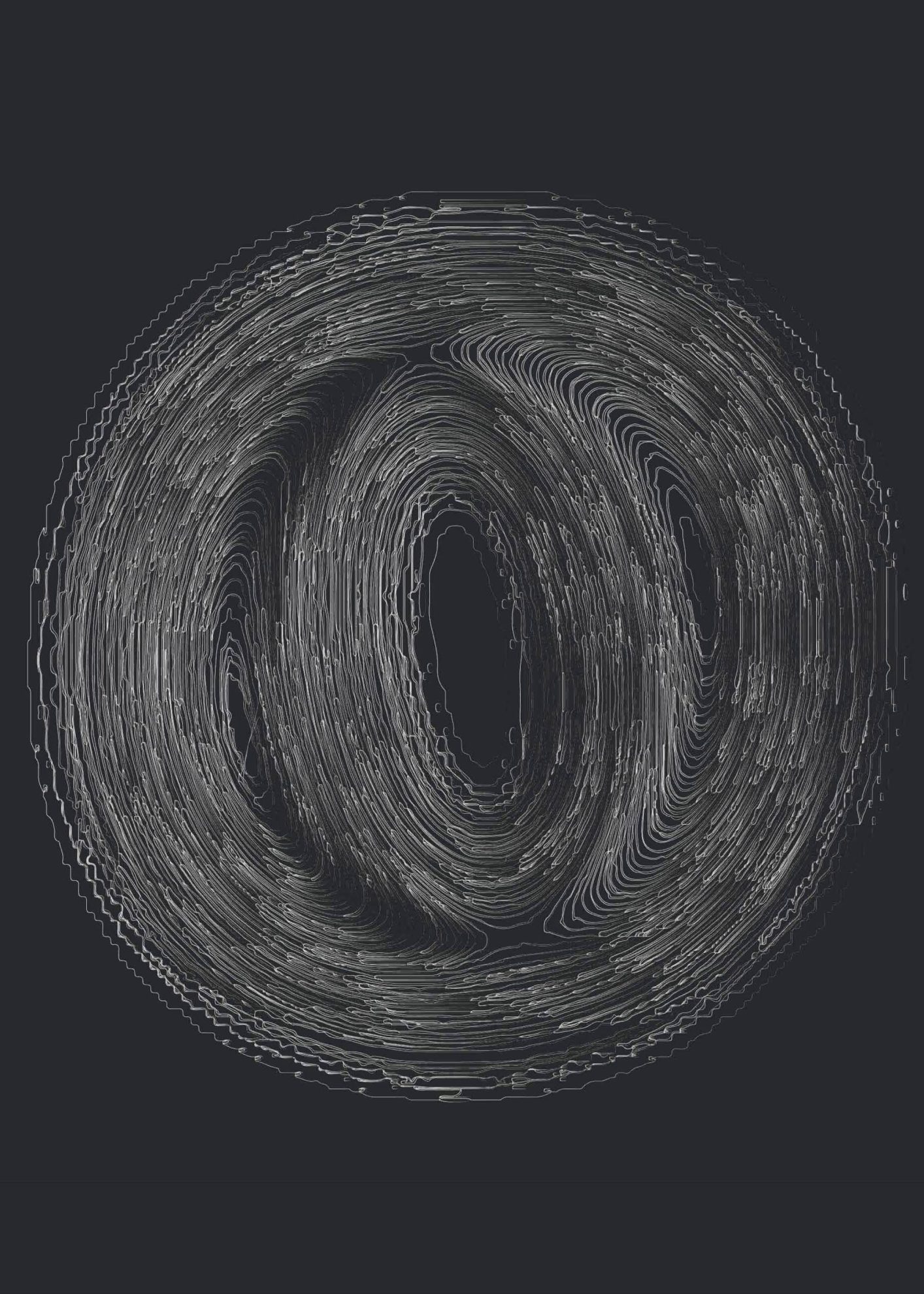

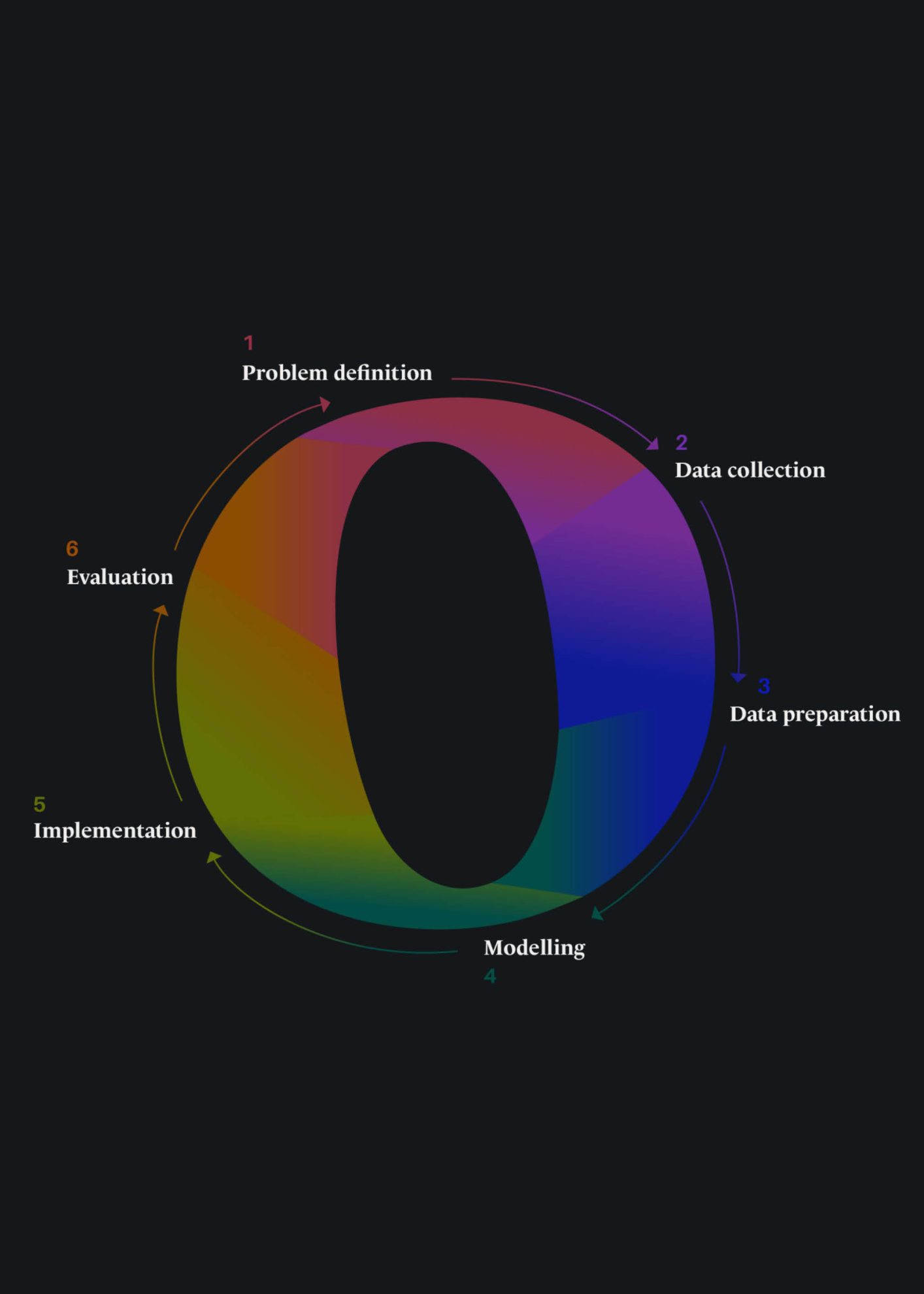

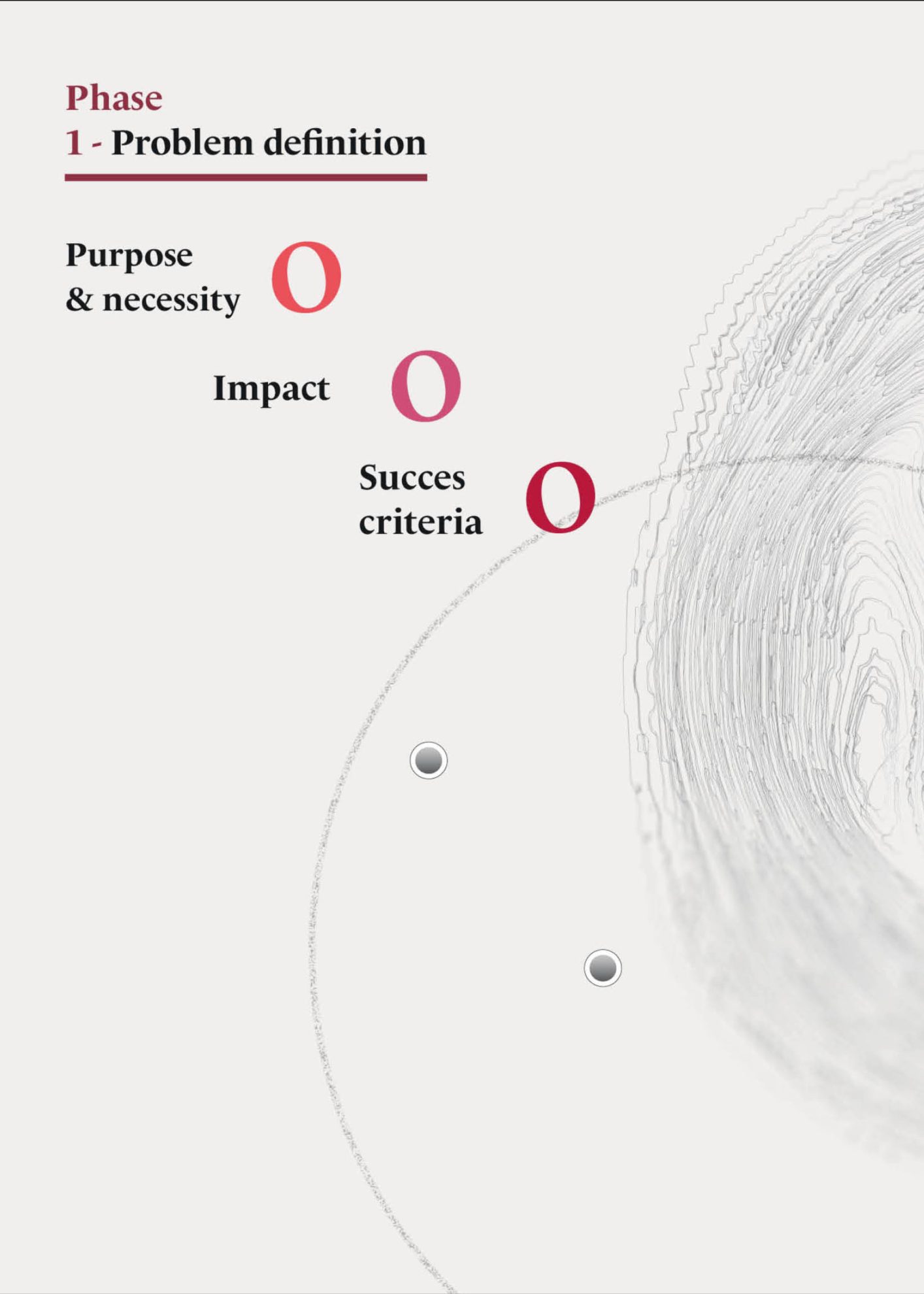

Janssen designed a visual language to show the dangers of algorithmic bias, based on three key conditions: technical (an incomplete or selective dataset), legal (an unclear or one-dimensional perspective), and organizational (the lack of a guiding definition of success). The design features a series of manipulated, biased ‘o’s, which change shape, color, and composition throughout the document. These dynamic elements animate the content and guide the reader through the complexities of the legal issues at hand.
